Embodied Impressions

EXPERIENTIAL ART | This project was part of my MFA in Design and Technology at the Parsons School of Design. An interactive model designed to invite exploration, it centers the sense of creation as it is experienced during the act of creating.

The Goal

To use an interactive model to illustrate how the most important aspects of creation lie in the act of engaging in it, not in the production of a measurable output. In other words, it’s about the process and the journey, not the product at the destination.

The Timeline

January-May 2023.

The Process

Ideation, conceptualisation, research, analysis, making, prototyping, coding, interaction design, experience design, testing, iteration, detailing, presentation.

Research Goals

My research goals evolved with the project. I began by brainstorming a design project that dealt with the intersection of people, design, technology, and space.

My initial intention was broader: to explore interpretations of human behaviour and abstract them in order to affect different perspectives. I aimed to create a visual feedback loop and to examine how people responded to it, and which factors shaped that behaviour.

Brainstorming

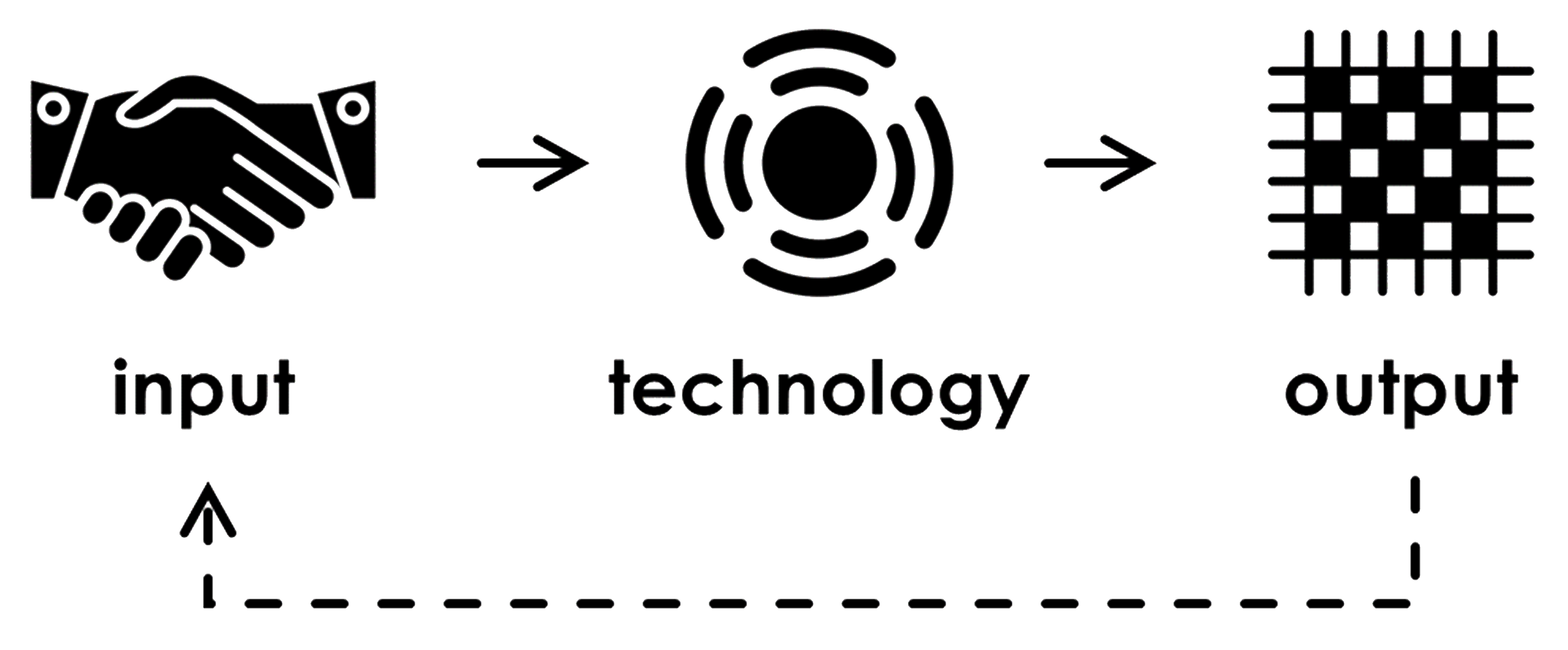

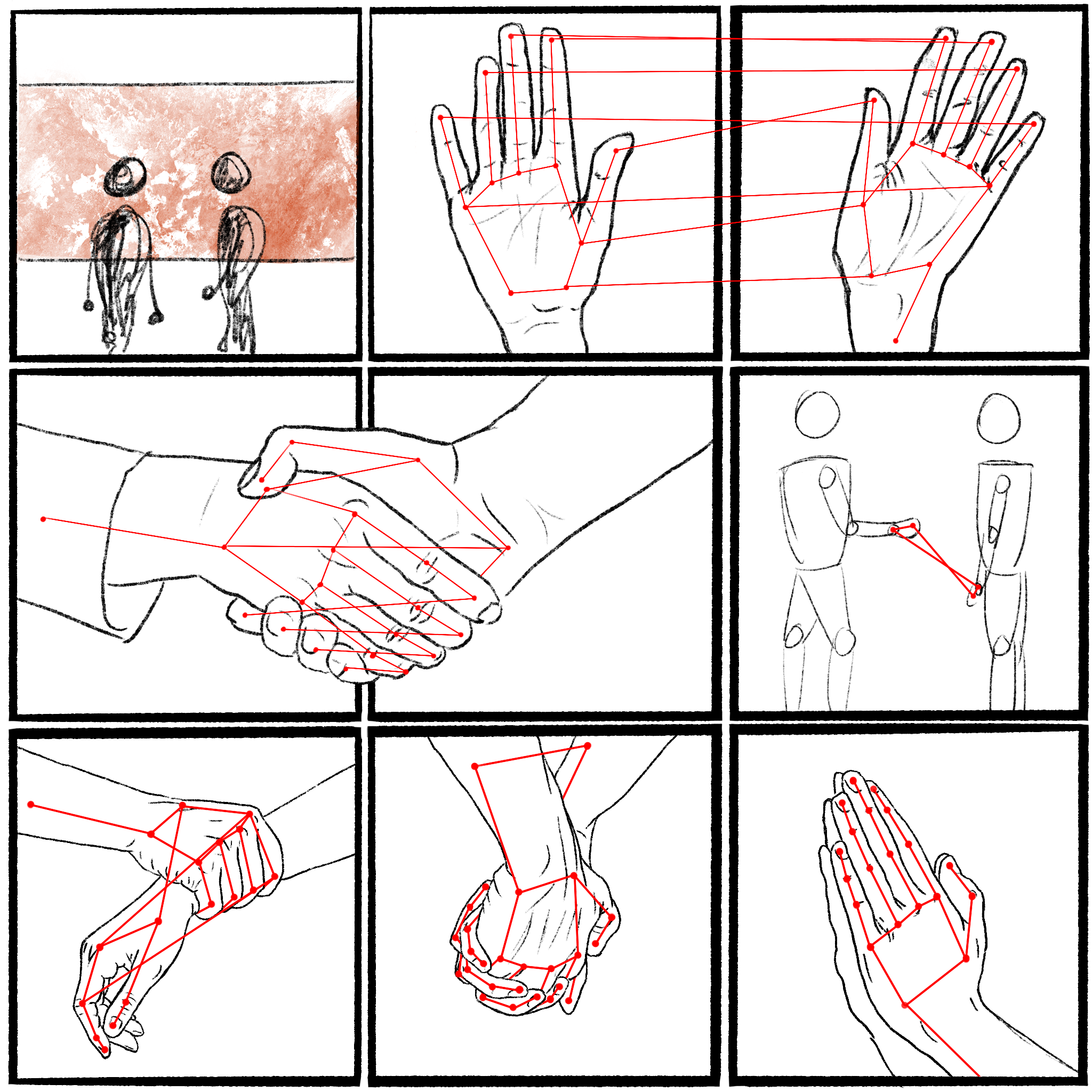

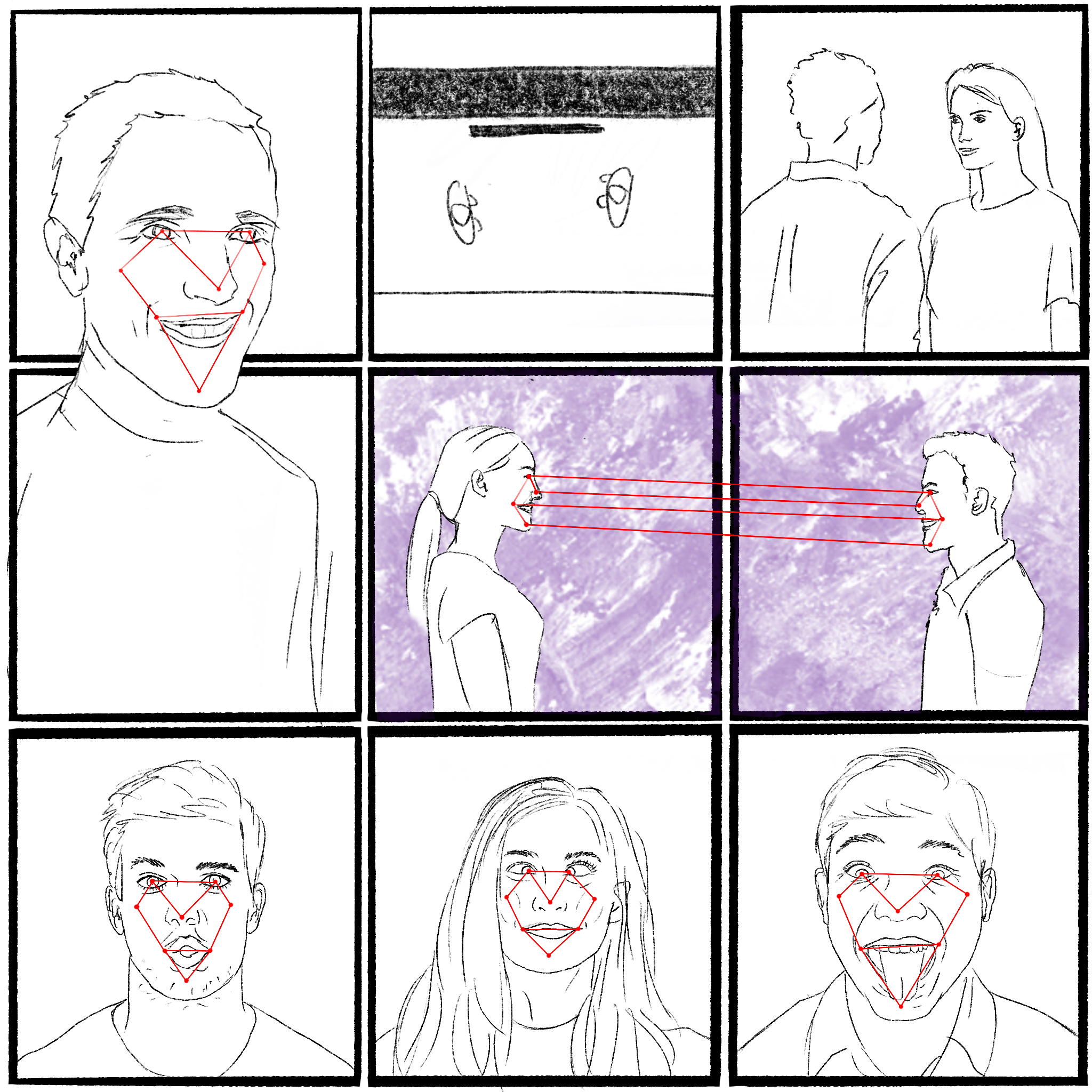

For the first stage, I divided my project into three components, which I then brainstormed and tested individually:

Input

I needed to work out which human actions to capture and process. I sketched and storyboarded ideas to visualise them.

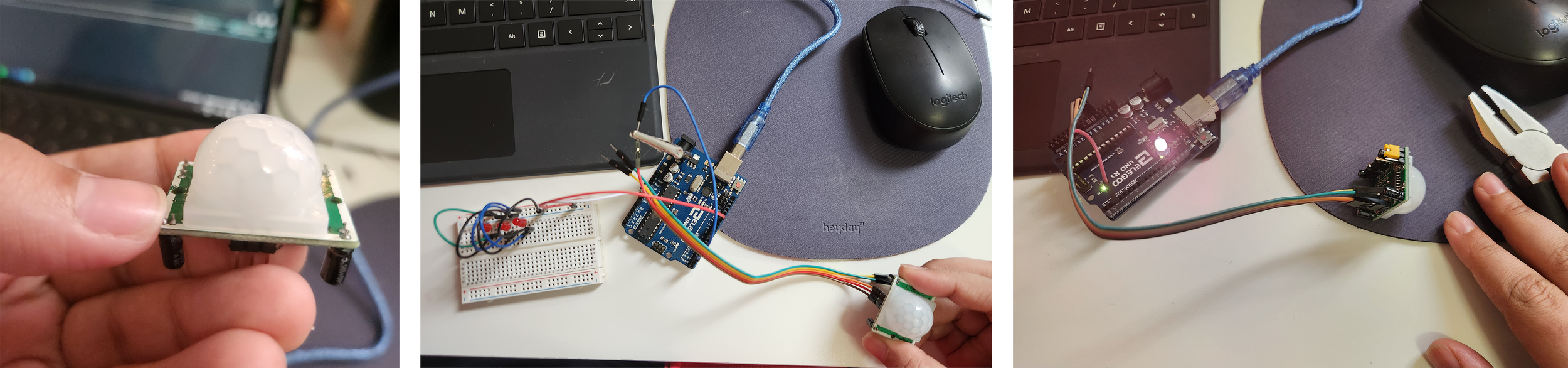

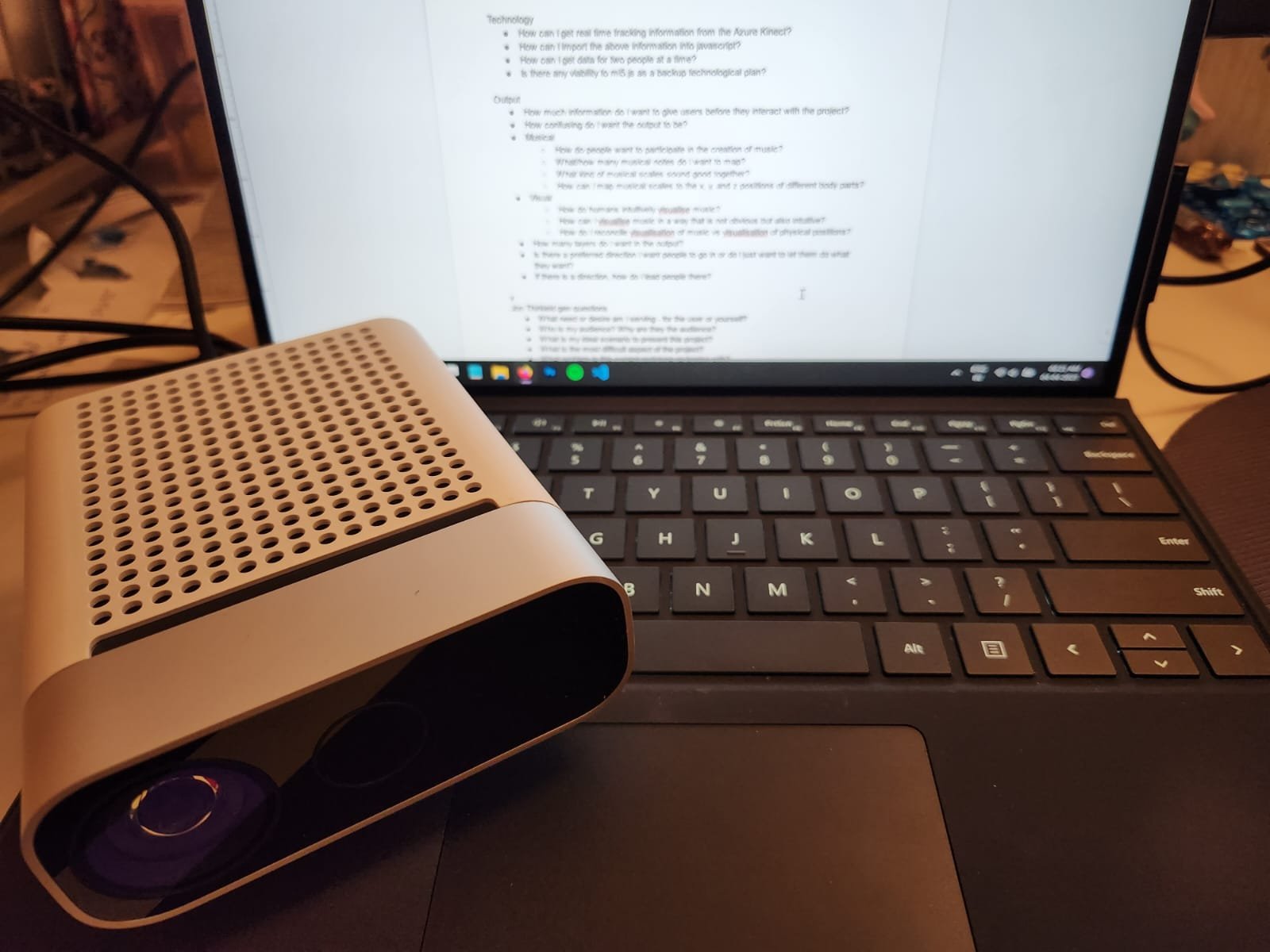

Technology

I tested a number of tools and techniques, including, but not limited to, a Leap Motion sensor, an Arduino, an Xbox Kinect, ml5.js, motion capture, and TouchDesigner, before deciding which worked best for my project. Whatever I chose needed to capture information from the user and translate it into workable variables.
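To make "workable variables" concrete, here is a minimal sketch of one common normalization step. It is not the project's actual code and it assumes a pose estimator that reports each body part as pixel coordinates plus a confidence score. The `Keypoint` shape and the `toWorkableVariables` helper are hypothetical, not part of any library's API. Dividing by the frame size gives resolution-independent values between 0 and 1 that can drive any visual or audio parameter.

```typescript
// Hypothetical keypoint shape (an assumption, not a library type):
// a named body part with pixel coordinates and a 0..1 confidence score.
interface Keypoint {
  name: string;
  x: number;
  y: number;
  score: number;
}

// Clamp a value into the closed range [lo, hi].
function clamp(value: number, lo: number, hi: number): number {
  return Math.min(hi, Math.max(lo, value));
}

// Convert a keypoint's pixel position into normalized 0..1 variables.
// When mirror is true, x is flipped so on-screen motion follows the user
// the way a mirror would.
function toWorkableVariables(
  kp: Keypoint,
  width: number,
  height: number,
  mirror = true,
): { u: number; v: number } {
  const u = clamp(kp.x / width, 0, 1);
  return {
    u: mirror ? 1 - u : u,
    v: clamp(kp.y / height, 0, 1),
  };
}
```

With a camera facing the user, flipping the horizontal axis means that when the user moves to their right, the on-screen response also moves right. Clamping keeps stray detections outside the frame from producing out-of-range values.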

Output

I created a series of sketches while exploring the aesthetic and values I wanted for the on-screen output.

The sketching process led me to add audio output alongside the on-screen feedback. Audio provoked a more immediate and direct reaction from users.

Prototyping and Testing

As I built higher-fidelity prototypes of my project, I tested them with peers to observe their behaviour and gather feedback.

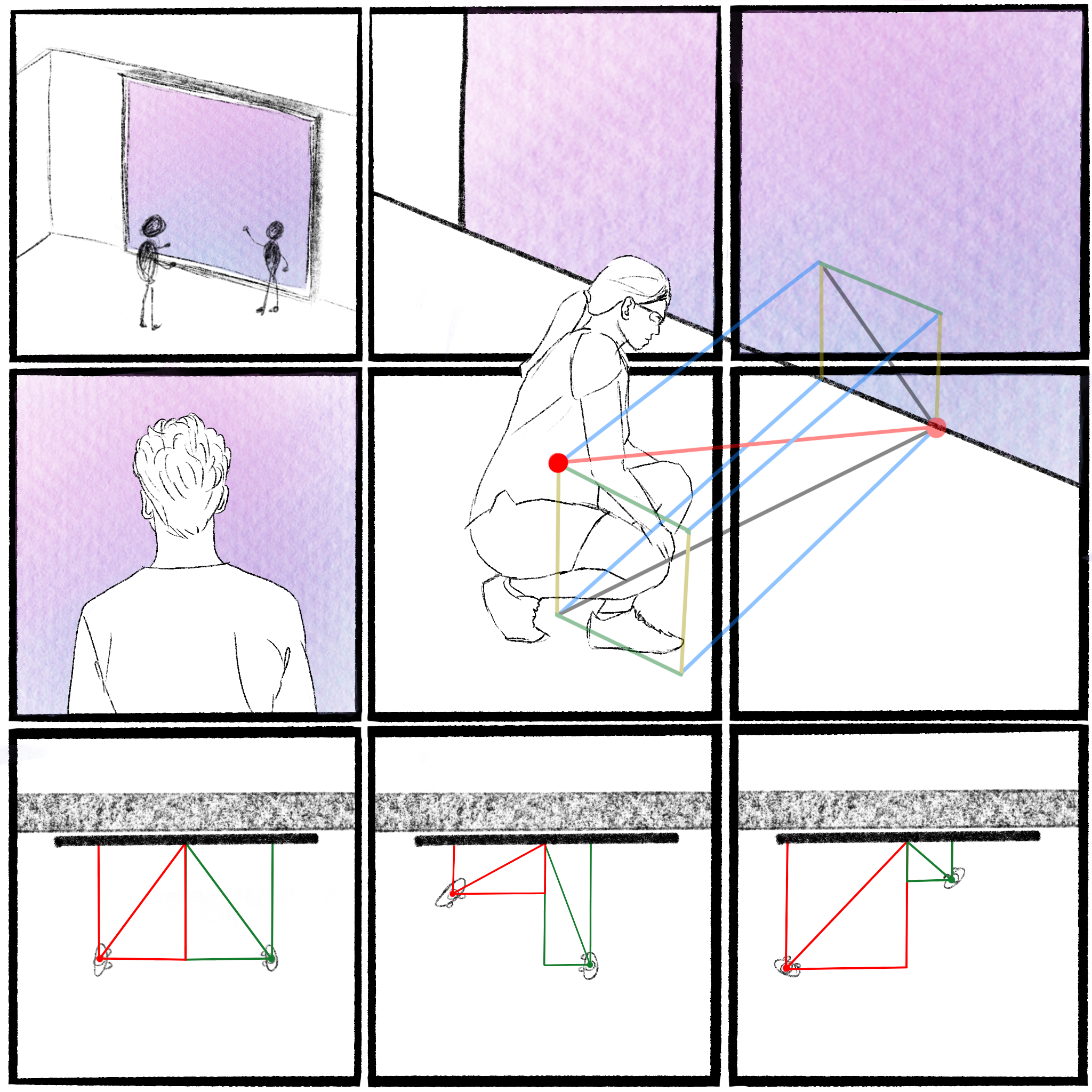

For the first test, I used an Azure Kinect, ml5.js, p5.js, and tone.js to create a generative piece whose visuals (based on a sketch from earlier in the process) and sound responded to user interaction.

Based on that test, I focused on refining the experience by adding interactions and connecting different gestures, visuals, and sounds. I identified gestures that needed fine-tuning: jumping did not feel instinctive, and the three main outputs (the lines, circles, and notes) needed to be more closely interlinked.

Next, I refined the visual output to feel more organic and to respond to movement rather than just position, so that it became something users could have fun engaging with. This let me observe behaviour more closely, and I noted that people instinctively expected a response to gestures such as moving their limbs.
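Responding to movement rather than position means estimating how fast a body part travels between frames. The sketch below shows one common way to do that: frame-to-frame distance divided by elapsed time, smoothed with an exponential moving average to damp detection jitter. It is an illustration under assumptions, not the project's code; the `MotionTracker` class, the `Point` type, and the default smoothing factor of 0.3 are all hypothetical.

```typescript
// Hypothetical 2D point in pixels.
interface Point {
  x: number;
  y: number;
}

// Tracks one body part across frames and returns a smoothed speed
// in pixels per second. Smoothing uses an exponential moving average.
class MotionTracker {
  private last: Point | null = null;
  private smoothedSpeed = 0;
  private readonly alpha: number;

  // alpha in 0..1: higher reacts faster, lower is steadier (0.3 is an assumed default)
  constructor(alpha = 0.3) {
    this.alpha = alpha;
  }

  // position: current pixel position; dtSeconds: time since the previous frame
  update(position: Point, dtSeconds: number): number {
    if (this.last !== null && dtSeconds > 0) {
      const distance = Math.hypot(position.x - this.last.x, position.y - this.last.y);
      const instantSpeed = distance / dtSeconds;
      // Blend the new measurement with the running estimate.
      this.smoothedSpeed = this.alpha * instantSpeed + (1 - this.alpha) * this.smoothedSpeed;
    }
    this.last = { x: position.x, y: position.y };
    return this.smoothedSpeed;
  }
}
```

The first call only records a starting position and returns 0. Keeping smoothing separate from the mapping step makes it easy to tune how "twitchy" the visuals feel without touching the art itself.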

As it started to come together, the prototype elicited almost dance-like reactions.

The Final Project

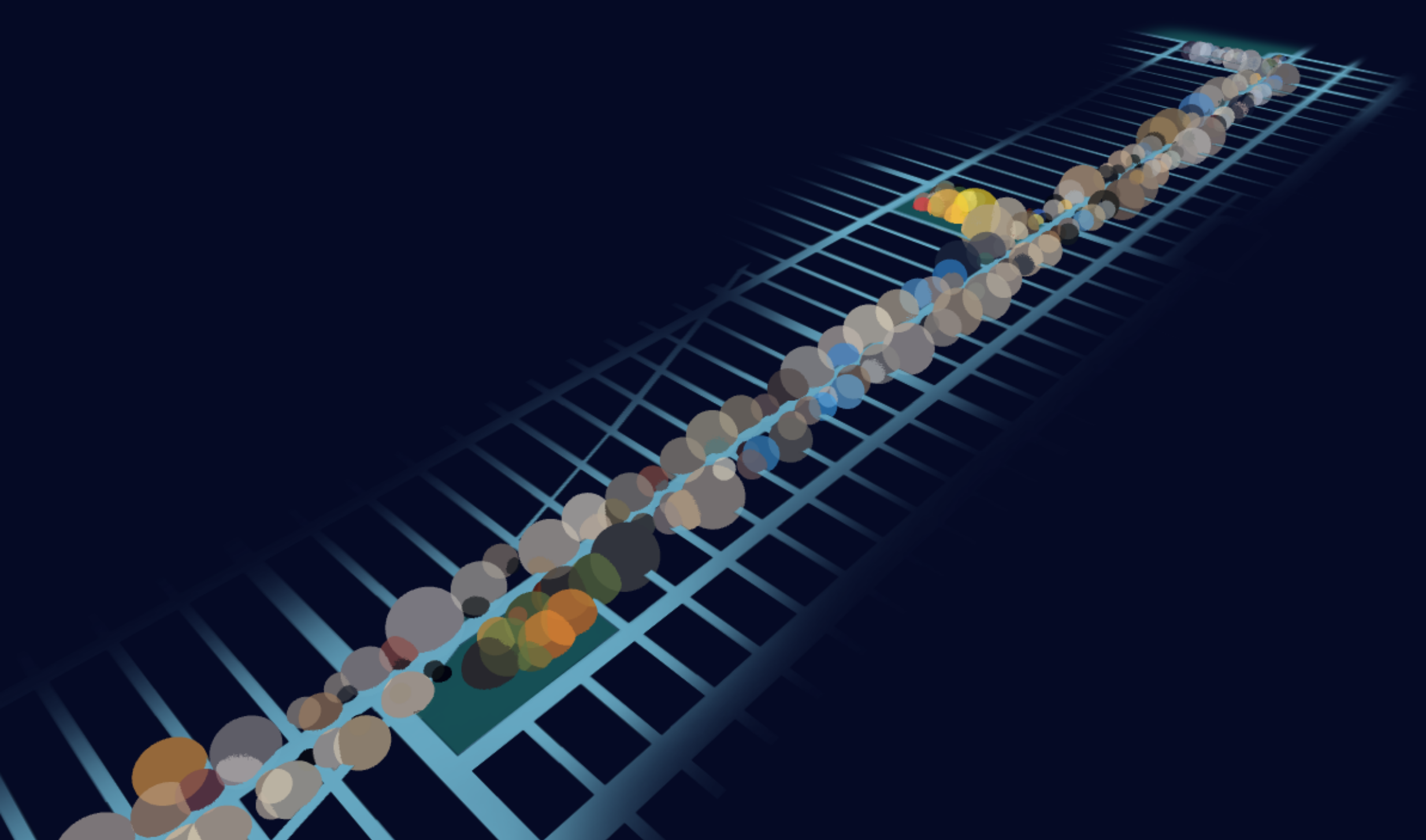

I explored many lines of thought while developing this project: interactivity, artistry, engagement, visuals, and sound. The project is an exploration of creation and the sense we get when engaging in the act.

I want this project to illustrate how the most important aspects of creation lie in the act of engaging in it, not in the production of a measurable output. This project is an art piece that only exists while a user is interacting with it. There is no finished product that the user can record and reflect on: the art that users create is present only while the interaction is happening, so it is unique and cannot be replicated.

How it works

The system uses a Microsoft Azure Kinect to capture the user in the space. The video feed is passed to ml5.js, which processes each frame and detects the body parts that make up a human pose. Different parts (shoulders, nose, arms, face) are then used to alter the parameters of a generative art piece in real time.
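As an illustration of how detected body parts might become art parameters, here is a minimal sketch that looks up named keypoints, ignores low-confidence detections, and derives two values: a hue from the nose's horizontal position and a stroke weight from the distance between the shoulders. Everything here is an assumption for illustration: the `Keypoint` and `ArtParams` shapes, the part names, the 0.5 confidence threshold, and the scaling constants are hypothetical and are not taken from the project or from the ml5.js API.

```typescript
// Hypothetical keypoint shape: a named body part, pixel position, 0..1 confidence.
interface Keypoint {
  name: string;
  x: number;
  y: number;
  score: number;
}

// Hypothetical parameters for a generative visual.
interface ArtParams {
  hue: number; // 0..360, driven by the nose's horizontal position
  strokeWeight: number; // driven by the distance between the shoulders
}

const MIN_SCORE = 0.5; // assumed confidence threshold

// Return the named part only if it was detected confidently enough.
function findPart(keypoints: Keypoint[], name: string): Keypoint | undefined {
  return keypoints.find((kp) => kp.name === name && kp.score >= MIN_SCORE);
}

// Map a pose to art parameters, or return null so the caller can keep
// the previous frame's values when a required part is unreliable.
function poseToParams(keypoints: Keypoint[], width: number): ArtParams | null {
  const nose = findPart(keypoints, "nose");
  const left = findPart(keypoints, "leftShoulder");
  const right = findPart(keypoints, "rightShoulder");
  if (!nose || !left || !right) return null;

  const shoulderSpan = Math.hypot(right.x - left.x, right.y - left.y);
  return {
    hue: Math.min(360, Math.max(0, (nose.x / width) * 360)),
    // Shoulder span grows as the user approaches the camera, so nearer users draw bolder lines.
    strokeWeight: 1 + (shoulderSpan / width) * 20,
  };
}
```

Returning `null` instead of guessing lets the drawing loop hold its last good state, which avoids sudden visual jumps when a body part briefly drops out of detection.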

The art itself is built with p5.js (for visuals) and tone.js (for audio). Moving visuals are controlled by the position, speed, and pose of a user. Different musical notes play based on where and how the user moves in the space.
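One way to make notes depend on where and how the user moves is to quantize a normalized horizontal position into a musical scale and let movement speed pick a note length. The sketch below is an assumption-laden illustration, not the project's mapping: the C major pentatonic `SCALE`, the `positionToNote` and `speedToDuration` helpers, and the speed thresholds are all hypothetical. The returned strings use the note-name ("C4") and duration ("8n") notation that Tone.js accepts.

```typescript
// Hypothetical scale: C major pentatonic over two octaves, so any
// combination of notes stays consonant.
const SCALE: readonly string[] = ["C4", "D4", "E4", "G4", "A4", "C5", "D5", "E5", "G5", "A5"];

// Quantize a normalized horizontal position (0 = left, 1 = right) to a note name.
function positionToNote(u: number, scale: readonly string[] = SCALE): string {
  if (scale.length === 0) throw new Error("scale must not be empty");
  const clamped = Math.min(1, Math.max(0, u));
  // Floor into equal-width bands; cap the index so u = 1 maps to the last note.
  const index = Math.min(scale.length - 1, Math.floor(clamped * scale.length));
  return scale[index];
}

// Faster movement gives shorter notes. Thresholds in pixels per second are arbitrary assumptions.
function speedToDuration(speedPxPerSec: number): string {
  if (speedPxPerSec > 800) return "16n";
  if (speedPxPerSec > 300) return "8n";
  return "4n";
}
```

In a browser, the two results could be passed to a Tone.js synth's `triggerAttackRelease(note, duration)`. Restricting notes to a pentatonic scale is a common choice for open-ended interactive pieces, because no position the user reaches produces a clashing note.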

Next Steps

Moving forward, there is more I would like to refine in this project. There are more gestures and behaviours I could map, which would create a feedback loop with more refined output, both visual and auditory.

A key change I would like to make is bringing more than one person into the system at a time. I would like to observe the interactions between them, see how these affect the way they behave in the space, and refine the outputs in response.
